Two different methods have been proposed that combine eligibility traces and

Q-learning; we call them Watkins's Q(![]() ) and Peng's Q(

) and Peng's Q(![]() ), after the researchers who first proposed them. First we describe

Watkins's Q(

), after the researchers who first proposed them. First we describe

Watkins's Q(![]() ).

).

Recall that Q-learning is an off-policy method, meaning that the

policy learned about need not be the same as the one used to select actions. In

particular, Q-learning learns about the greedy policy while it typically follows a

policy involving exploratory actions--occasional selections of actions that are

suboptimal according to

![]() . Because of this, special care is required when introducing eligibility

traces.

. Because of this, special care is required when introducing eligibility

traces.

Suppose we are backing up the state-action pair ![]() at time

at time ![]() . Suppose that on the next two time steps the agent

selects the greedy action, but on the third, at time

. Suppose that on the next two time steps the agent

selects the greedy action, but on the third, at time ![]() , the agent selects

an exploratory, nongreedy action. In learning about the value of the greedy

policy at

, the agent selects

an exploratory, nongreedy action. In learning about the value of the greedy

policy at ![]() we can use subsequent experience only as long as the

greedy policy is being followed. Thus, we can use the one-step and two-step

returns, but not, in this case, the three-step return. The

we can use subsequent experience only as long as the

greedy policy is being followed. Thus, we can use the one-step and two-step

returns, but not, in this case, the three-step return. The ![]() -step returns

for all

-step returns

for all ![]() no longer have any necessary relationship to the greedy

policy.

no longer have any necessary relationship to the greedy

policy.

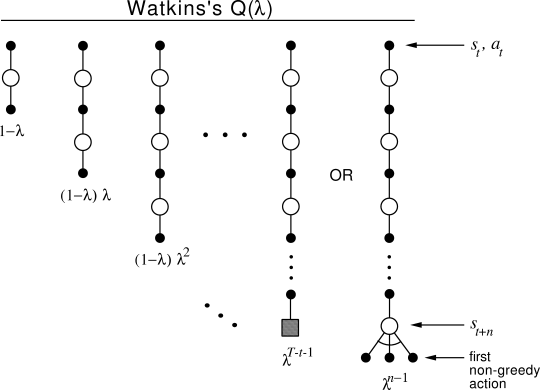

Thus, unlike TD(![]() ) or Sarsa(

) or Sarsa(![]() ), Watkins's Q(

), Watkins's Q(![]() ) does not look ahead all the

way to the end of the episode in its backup. It only looks ahead

as far as the next exploratory action. Aside from this difference, however,

Watkins's Q(

) does not look ahead all the

way to the end of the episode in its backup. It only looks ahead

as far as the next exploratory action. Aside from this difference, however,

Watkins's Q(![]() ) is much like TD(

) is much like TD(![]() ) and Sarsa(

) and Sarsa(![]() ). Their lookahead

stops at episode's end, whereas Q(

). Their lookahead

stops at episode's end, whereas Q(![]() )'s lookahead stops at the first exploratory

action, or at episode's end if there are no exploratory actions before that.

Actually, to be more precise, one-step Q-learning and Watkins's Q(

)'s lookahead stops at the first exploratory

action, or at episode's end if there are no exploratory actions before that.

Actually, to be more precise, one-step Q-learning and Watkins's Q(![]() ) both look

one action past the first exploration, using their knowledge of the

action values. For example, suppose the first action,

) both look

one action past the first exploration, using their knowledge of the

action values. For example, suppose the first action, ![]() , is

exploratory. Watkins's Q(

, is

exploratory. Watkins's Q(![]() ) would still do the one-step update of

) would still do the one-step update of

![]() toward

toward

![]() . In general, if

. In general, if ![]() is the

first exploratory action, then the longest backup is toward

is the

first exploratory action, then the longest backup is toward

|

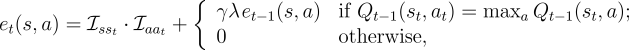

The mechanistic or backward view of Watkins's Q(![]() ) is also

very simple. Eligibility traces are used just as in Sarsa(

) is also

very simple. Eligibility traces are used just as in Sarsa(![]() ), except that they are

set to zero whenever an exploratory (nongreedy) action is taken. The trace

update is best thought of as occurring in two steps. First, the traces for all

state-action pairs are either decayed by

), except that they are

set to zero whenever an exploratory (nongreedy) action is taken. The trace

update is best thought of as occurring in two steps. First, the traces for all

state-action pairs are either decayed by ![]() or, if an exploratory

action was taken, set to

or, if an exploratory

action was taken, set to ![]() . Second, the trace corresponding to the current state

and action is incremented by

. Second, the trace corresponding to the current state

and action is incremented by ![]() . The overall result is

. The overall result is

|

Unfortunately, cutting off traces every time an exploratory action

is taken loses much of the advantage of using eligibility traces. If

exploratory actions are frequent, as they often are early in learning, then

only rarely will backups of more than one or two steps be done, and learning may

be little faster than one-step Q-learning. Peng's Q(![]() ) is an alternate version

of

Q(

) is an alternate version

of

Q(![]() ) meant to remedy this. Peng's Q(

) meant to remedy this. Peng's Q(![]() ) can be thought of as a hybrid of

Sarsa(

) can be thought of as a hybrid of

Sarsa(![]() ) and Watkins's Q(

) and Watkins's Q(![]() ).

).

Conceptually, Peng's Q(![]() ) uses the mixture of backups shown

in Figure

7.15. Unlike Q-learning,

there is no distinction between exploratory and greedy

actions. Each component backup is over many steps of

actual experiences, and all but the last are capped by a final

maximization over actions. The component backups, then, are

neither on-policy nor off-policy. The earlier

transitions of each are on-policy, whereas the last (fictitious)

transition uses the greedy policy. As a

consequence, for a fixed nongreedy policy,

) uses the mixture of backups shown

in Figure

7.15. Unlike Q-learning,

there is no distinction between exploratory and greedy

actions. Each component backup is over many steps of

actual experiences, and all but the last are capped by a final

maximization over actions. The component backups, then, are

neither on-policy nor off-policy. The earlier

transitions of each are on-policy, whereas the last (fictitious)

transition uses the greedy policy. As a

consequence, for a fixed nongreedy policy, ![]() converges to neither

converges to neither ![]() nor

nor ![]() under Peng's Q(

under Peng's Q(![]() ), but to some hybrid of the

two. However, if the policy is gradually made more greedy, then the method may

still converge to

), but to some hybrid of the

two. However, if the policy is gradually made more greedy, then the method may

still converge to ![]() . As of this writing this has

not yet been proved. Nevertheless, the method performs

well empirically. Most studies have shown it

performing significantly better than Watkins's Q(

. As of this writing this has

not yet been proved. Nevertheless, the method performs

well empirically. Most studies have shown it

performing significantly better than Watkins's Q(![]() ) and

almost as well as Sarsa(

) and

almost as well as Sarsa(![]() ).

).

On the other hand, Peng's Q(![]() ) cannot be implemented as simply as Watkins's

Q(

) cannot be implemented as simply as Watkins's

Q(![]() ). For a complete description of the needed implementation, see Peng and

Williams (1994, 1996). One could

imagine yet a third version of

Q(

). For a complete description of the needed implementation, see Peng and

Williams (1994, 1996). One could

imagine yet a third version of

Q(![]() ), let us call it naive Q(

), let us call it naive Q(![]() ), that is just like Watkins's Q(

), that is just like Watkins's Q(![]() ) except

that the traces are not set to zero on exploratory actions. This method might

have some of the advantages of Peng's Q(

) except

that the traces are not set to zero on exploratory actions. This method might

have some of the advantages of Peng's Q(![]() ), but without the

complex implementation. We know of no experience with this method, but

perhaps it is not as naive as one might at first suppose.

), but without the

complex implementation. We know of no experience with this method, but

perhaps it is not as naive as one might at first suppose.